Common Pitfalls in Automated Testing and How to Avoid Them

Synopsis: Automated testing can revolutionize how software development teams work, promising faster releases, fewer errors, and a safety net for complex projects. Yet, it’s far from a magic bullet. Teams frequently stumble into predictable traps when implementing it—test automation challenges that turn potential into frustration. In this post, we’ll unpack the most common mistakes teams make, from over-automation to flaky scripts, and offer practical, hands-on solutions to overcome these challenges in automation testing.

Software development thrives on efficiency, and automated testing seems like the golden ticket. It’s designed to save time, catch bugs early, and free up testers for more creative work. But here’s the reality: the path to effective automation is riddled with pitfalls. Whether it’s unrealistic expectations, sloppy planning, or technical missteps, these challenges can derail even the best intentions. Let’s look at the most common pitfalls in automated testing and the practical strategies that help teams avoid them.

One of the biggest automation challenges teams face is the knee-jerk reaction to automate every test case under the sun. It’s an appealing idea—why not let machines handle it all? But not every test is suited for automation. So, what test cases cannot be automated? Think about exploratory testing, where human intuition uncovers edge cases, or one-off scenarios that won’t repeat. Then there’s the early-stage UI—shifting daily as developers iterate—which makes scripts obsolete before they’re even finished. Trying to automate these is like chasing a moving target with a blindfold on.

Take a real-world example: a team I worked with once automated a feature that was still in the prototype phase. Two weeks later, the UI changed, and their scripts were useless. Hours were wasted and morale tanked. This is one of the top challenges in automated testing: overreach without strategy.

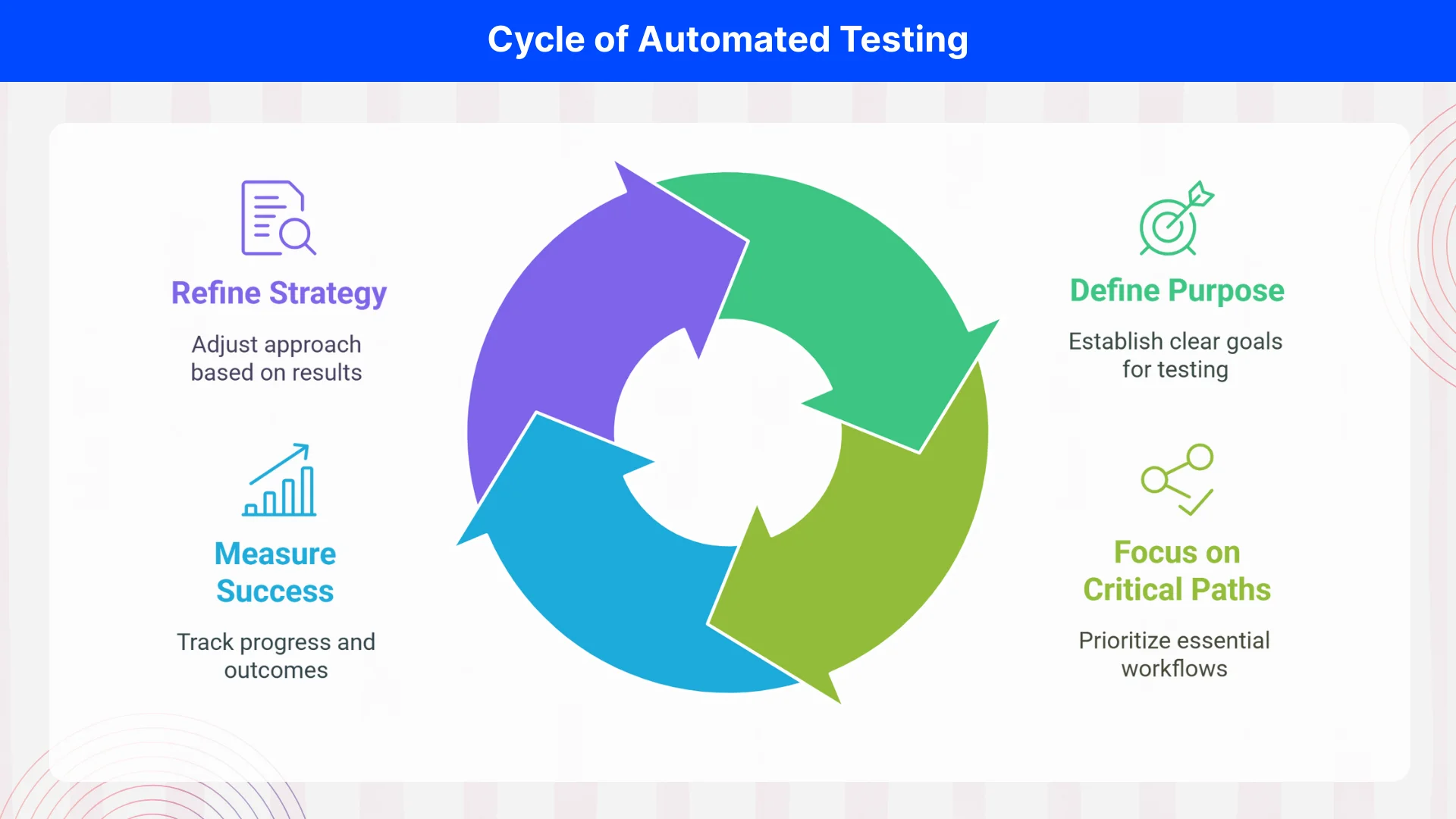

Solution: Be selective and deliberate. Focus on high-value, repetitive tests that deliver real returns, like regression suites, API validations, or critical user journeys (e.g., login or checkout flows). Start by asking three questions: Does this test run often? Does it cover a stable feature? Will it save time in the long run? Then build a prioritized plan; a useful rule of thumb is to start with the 20% of tests that catch 80% of your defects. This disciplined approach is a cornerstone of successful test automation. It keeps your effort focused and avoids one of the main downsides of automation: bloated, unmanageable suites.
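The three questions above can be turned into a rough scoring pass over a backlog of manual tests. The sketch below is one simple heuristic, not a standard formula: AutomationCandidate, automation_score, and prioritize are hypothetical names, and the score (runs per month times manual minutes, zeroed for unstable features) is an assumption you would tune to your own project.

```python
from dataclasses import dataclass


@dataclass
class AutomationCandidate:
    """Hypothetical record for a manual test being considered for automation."""
    name: str
    runs_per_month: int      # question 1: does this test run often?
    feature_is_stable: bool  # question 2: does it cover a stable feature?
    manual_minutes: float    # question 3 input: time a person spends per run


def automation_score(c: AutomationCandidate) -> float:
    """Minutes of manual effort removed per month; unstable features score zero.

    Scripts for a still-changing feature are likely to be obsolete before
    they pay off, so they are excluded rather than merely ranked low.
    """
    if not c.feature_is_stable:
        return 0.0
    return c.runs_per_month * c.manual_minutes


def prioritize(candidates: list[AutomationCandidate]) -> list[AutomationCandidate]:
    """Return worthwhile candidates, highest estimated savings first."""
    ranked = sorted(candidates, key=automation_score, reverse=True)
    return [c for c in ranked if automation_score(c) > 0]


if __name__ == "__main__":
    backlog = [
        AutomationCandidate("checkout flow", 40, True, 15),
        AutomationCandidate("login", 60, True, 5),
        AutomationCandidate("prototype dashboard", 30, False, 20),
        AutomationCandidate("footer links", 2, True, 3),
    ]
    for c in prioritize(backlog):
        print(f"{c.name}: {automation_score(c):.0f} min/month")
```

Ranking by minutes saved pushes stable, frequently run flows like checkout and login to the top and drops the prototype dashboard entirely, which mirrors the advice to leave still-changing features to manual testing.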

Automation isn’t a “set it and forget it” solution, though many teams treat it that way. Scripts aren’t immune to the chaos of evolving software—new features, UI updates, or backend changes can break them in an instant. Neglecting maintenance is a key factor leading to failures in automated test scripts, and it’s one of the sneakiest challenges in test automation. I’ve seen teams launch with enthusiasm, only to end up with a graveyard of outdated tests six months later, wondering why their pipeline’s a mess.

Consider a web app where a button’s ID changes from “submit-btn” to “save-btn.” If your script hardcodes that locator, it’s toast. Multiply that by dozens of tests, and you’ve got a maintenance nightmare—especially in fast-paced test automation projects.

Solution: Treat your test suite like production code. Schedule regular reviews (say, biweekly or after major releases) to update scripts. Refactor for resilience: use dynamic locators (e.g., XPath with partial matches) instead of hardcoded IDs, and parameterize inputs so tests adapt to change. Invest in logging so you can trace failures quickly. For example, a team I advised cut maintenance time by 30% just by adding detailed failure logs, which made pinpointing issues a breeze. Proactive upkeep is how you avoid these mistakes and keep your automation humming along.
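To make the locator and logging advice concrete, here is a minimal Python sketch of a single locator registry with fallbacks and a log line for every miss, so a rename like submit-btn becoming save-btn is fixed in one place and leaves a clear trail when it breaks. LOCATORS, find, and the selectors are hypothetical; the query argument stands in for your tool’s lookup. With Selenium it could be something like lambda by, value: next(iter(driver.find_elements(by, value)), None), since "css selector" and "xpath" are the string values of By.CSS_SELECTOR and By.XPATH.

```python
import logging
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("locators")

# Hypothetical registry: each logical element lists (strategy, value) pairs
# in order of preference. Tests refer to "save_button", never to raw IDs.
LOCATORS: dict[str, list[tuple[str, str]]] = {
    "save_button": [
        ("css selector", "[data-testid='save']"),  # dedicated test attribute, if the app has one
        ("css selector", "#save-btn"),             # current ID
        # Partial match that survives the submit-btn -> save-btn rename.
        ("xpath", "//button[contains(@id, 'save') or contains(@id, 'submit')]"),
    ],
}

# query(strategy, value) returns an element, or None if nothing matched.
Query = Callable[[str, str], Optional[object]]


def find(name: str, query: Query) -> object:
    """Return the first element matched by any candidate locator for `name`.

    Every miss is logged, so a failure report shows exactly which
    locators were tried before the lookup gave up.
    """
    candidates = LOCATORS[name]
    for strategy, value in candidates:
        element = query(strategy, value)
        if element is not None:
            log.info("%s found via %s=%s", name, strategy, value)
            return element
        log.warning("%s: %s=%s matched nothing", name, strategy, value)
    raise LookupError(f"{name}: no candidate locator matched {candidates}")


if __name__ == "__main__":
    # Stand-in for a real page: only the current ID is present.
    fake_page = {("css selector", "#save-btn"): "<button id='save-btn'>"}
    print(find("save_button", lambda strategy, value: fake_page.get((strategy, value))))
```

Because every test goes through find, a future rename means editing one list instead of dozens of scripts, and the warnings in the log show which locators stopped matching.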

Flaky tests are the bane of automation—those infuriating scripts that pass one day and fail the next for no clear reason. They’re a classic automation problem, eroding trust in the system and forcing teams to waste hours chasing ghosts. Common causes? Timing issues (e.g., a page loads slower than expected), unstable test data (e.g., reusing an email that’s already taken), or brittle selectors that crumble in mobile automation testing scenarios.

I once saw a team wrestle with a flaky login test. It worked fine locally but failed in CI because the server lagged. The root issue? A hardcoded 2-second wait that didn’t account for real-world delays. Flakiness isn’t just annoying—it’s one of the challenges faced in automation testing that can sink morale and credibility.

Solution: Hunt down flakiness with intent. Replace static sleeps with explicit waits (e.g., wait until an element is clickable). Stabilize your environment—use dedicated test servers or mock APIs to control variables. For data, generate fresh inputs (e.g., timestamped emails like user+timestamp@example.com). In mobile testing, double-check device states—screen orientation, network conditions—before each run. Effective strategies to prevent automated testing failures hinge on reliability: if a test fails, it should signal a real defect, not a hiccup.
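Both the wait and the test-data fixes can be sketched in a few lines of Python. wait_until is a generic polling helper (a hypothetical name) that replaces a fixed sleep: it returns as soon as a condition holds and fails only after a timeout. Selenium ships the same idea as WebDriverWait(driver, 10).until(EC.element_to_be_clickable(...)), which is usually the better choice for browser tests. unique_email, also hypothetical, builds the kind of timestamped address described above; adding a random suffix would be a reasonable extra safeguard if tests run in parallel.

```python
import time
from datetime import datetime, timezone
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.25) -> T:
    """Poll `condition` until it returns something truthy; raise after `timeout` seconds.

    Unlike a hardcoded 2-second sleep, this finishes as soon as the app is
    ready and only fails when the app is genuinely too slow.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


def unique_email(base: str = "user", domain: str = "example.com") -> str:
    """Return a fresh address such as user+20240101T120000123456@example.com."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%f")  # microsecond precision
    return f"{base}+{stamp}@{domain}"
```

In a test, wait_until(lambda: page_is_ready()) would take the place of time.sleep(2), where page_is_ready is whatever readiness check your tool offers.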


Picking the wrong tool, or assuming your team can figure it out on the fly, is a recipe for disaster. Automation problems multiply when the tool doesn’t match your stack (like using Selenium, a browser automation tool, for a native mobile app) or when no one is trained to use it. This hits hard in specialized areas like iOS testing, where platform-specific quirks demand expertise.

I’ve seen teams jump into Appium for mobile testing without understanding its setup. The result? Weeks lost to debugging instead of testing. Skill gaps are just as damaging: writing complex scripts requires coding chops, not just testing know-how. At their core, these mistakes come down to one lesson: preparation matters.

Solution: Do your homework. Match tools to your needs—Selenium for web, Appium for mobile, Postman for APIs—and test them in a pilot first. Invest in training: a few hours on a Udemy course or a workshop can save months of pain. For a team I consulted, a weekend bootcamp on Cypress turned rookies into contributors. Avoiding pitfalls in mobile automation testing scenarios starts with the right tools and skills in hand.

Here’s a subtle but pervasive challenge in automation: scripts without purpose. Some teams churn out tests because “automation is the goal,” not because they solve a problem. The result? Suites bloated with trivial checks—like verifying a footer link—while critical paths (e.g., payment processing) go untested. This lack of focus is one of the testing challenges that quietly undermines progress.

I recall a project where a team automated 200 low-priority UI validations. A month later, a major bug slipped through in the checkout flow—untested. They’d missed the forest for the trees.
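One lightweight guard against this kind of gap is to compare what the suite actually exercises against a short list of critical user journeys. The sketch below assumes each automated test is tagged with the journeys it covers; the journey names, test names, and uncovered_journeys helper are all hypothetical, and in a real project the tags might come from pytest markers or a naming convention.

```python
# Hypothetical inventory of the journeys the business cannot afford to break.
CRITICAL_JOURNEYS = {"login", "checkout", "payment"}

# Hypothetical suite: each automated test mapped to the journeys it exercises.
SUITE = {
    "test_footer_privacy_link": {"footer"},
    "test_footer_terms_link": {"footer"},
    "test_header_logo": {"header"},
    "test_login_valid_user": {"login"},
}


def uncovered_journeys(suite: dict[str, set[str]], critical: set[str]) -> set[str]:
    """Return the critical journeys that no automated test exercises."""
    covered: set[str] = set().union(*suite.values())
    return critical - covered


if __name__ == "__main__":
    missing = uncovered_journeys(SUITE, CRITICAL_JOURNEYS)
    print("Critical journeys without automated tests:", sorted(missing))
    # prints: Critical journeys without automated tests: ['checkout', 'payment']
```

Run as part of CI, a report like this would flag checkout and payment as uncovered no matter how many footer-style checks the suite already contains.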

Automation isn’t a lone wolf endeavor, but silos make it feel that way. When developers, testers, and product owners don’t talk, you get scripts that miss the mark—testing the wrong thing or ignoring real user needs. This disconnect is a recurring challenge automation teams face, especially in complex setups like cross-platform apps.

Once, a tester automated a feature based on outdated specs because the developer didn’t share updates. The scripts passed, but the feature failed in production. Collaboration gaps turn automation into guesswork.

Solution: Bridge the divide. Pull developers into script planning—they know the code’s quirks. Get testers to validate requirements with product owners. Hold quick syncs—15 minutes weekly—to align. For mobile projects, involve designers to flag UI shifts early. Collaboration is a linchpin in avoiding pitfalls in mobile automation testing scenarios and beyond—it ensures your tests reflect reality.

Automation isn’t a quick fix, but teams often expect it to be. They dive in anticipating instant speed-ups, only to hit setup delays, learning curves, or early failures. This impatience is one of the challenges in test automation that can kill momentum. I’ve seen managers push for full automation within a single sprint, then balk when it took three sprints to see gains.

Think of it like planting a tree: the roots need time to settle before you get shade. Rushing leads to half-baked solutions and disillusionment.

Solution: Set realistic expectations. Start small, with a single workflow or module, and measure progress (e.g., hours saved, defects caught). Scale gradually as confidence grows. A team I worked with automated its login tests first, saw testing time drop by 20% within a month, then expanded. Patience and iteration are how you avoid this mistake: slow and steady wins.
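Measuring progress can start with simple arithmetic: the hours of manual effort avoided each month, minus the time spent maintaining scripts, compared against the hours it took to build them. The functions below are a hypothetical sketch, and every number in the example is illustrative rather than data from the team mentioned above.

```python
def net_hours_saved_per_month(runs_per_month: int, manual_minutes: float,
                              automated_minutes: float, maintenance_hours: float) -> float:
    """Manual effort avoided each month, minus the time spent maintaining the scripts."""
    avoided = runs_per_month * (manual_minutes - automated_minutes) / 60
    return avoided - maintenance_hours


def break_even_months(build_hours: float, net_monthly_hours: float) -> float:
    """Months until the up-front effort of writing the tests is paid back."""
    if net_monthly_hours <= 0:
        return float("inf")  # at this rate the automation never pays for itself
    return build_hours / net_monthly_hours


if __name__ == "__main__":
    # Illustrative numbers only: a login workflow run 60 times a month,
    # 11 minutes by hand vs. about 1 minute of attention when automated,
    # 2 hours of upkeep a month, and 16 hours to build the first scripts.
    net = net_hours_saved_per_month(60, 11, 1, 2)
    print(f"net hours saved per month: {net:.1f}")               # prints: net hours saved per month: 8.0
    print(f"break-even after {break_even_months(16, net):.1f} months")  # prints: break-even after 2.0 months
```

Tracking these two figures per workflow makes it easier to decide which module to automate next, and shows early on when maintenance is eating the savings.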


Automated testing holds immense potential, but it’s not a plug-and-play solution. The challenges faced in automation testing—over-automation, neglected maintenance, flaky scripts, skill gaps, unclear goals, silos, and impatience—trip up even seasoned teams. Yet, each has a fix. By recognizing these automation problems and applying practical solutions, you can transform a rocky start into a robust, reliable process.

The key? Work smarter, not just harder. Plan with purpose, maintain diligently, prioritize reliability, and lean on your team. Whether you’re tackling test automation projects or dodging the disadvantages of automation testing, a thoughtful approach pays off. You’ll end up with a suite that doesn’t just run—it delivers, minus the headaches.

Basic programming concepts, knowledge of testing principles, familiarity with automation tools, understanding of your application, debugging skills, and version control experience are essential.

There’s no single “best” tool. Choose based on your needs: Selenium for web, Appium for mobile, Postman for APIs. Consider your team’s skills and project requirements.

Begin with simple, stable test cases like login flows. Learn one tool well (like Selenium for web), take online courses, and gradually expand as you build confidence.

Avoid automating exploratory tests, rapidly changing features, one-off scenarios, and tests requiring human judgment. Focus on stable, repetitive tests that deliver long-term value.

Schedule regular maintenance after major releases or biweekly. Treat test code like production code—it needs consistent updating as your application evolves.

Tests break when they rely on hardcoded elements that change. Use more resilient locators, implement a maintainable framework, and update tests alongside application changes.

Focus on automating 20-30% of tests that cover critical functions and run frequently. Quality matters more than quantity—prioritize high-value scenarios over complete coverage.

Yes, if focused correctly. Start small with critical workflows, use lightweight tools, and prioritize tests with immediate ROI. Even small automation efforts can yield significant benefits.

Unit testing focuses on isolated code components and is usually written by developers; unit tests are themselves typically automated. The broader automated testing discussed here (integration, API, and UI tests) covers functionality across multiple components and often simulates user behavior.
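For contrast, a unit test checks one isolated piece of code with no browser, server, or database involved. Here is a minimal sketch using Python’s built-in unittest module; apply_discount is a hypothetical function made up for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical pure function: no UI, network, or database involved."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Unit tests: each one exercises apply_discount in isolation."""

    def test_applies_percentage(self):
        self.assertEqual(apply_discount(50.0, 10), 45.0)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Running python -m unittest on the file executes both tests, typically in milliseconds, whereas a UI test of the same discount would need a running app and a browser.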

Expect 1-3 months before seeing significant benefits. Start with quick wins like smoke tests, then gradually expand. Automation is a long-term investment, not an overnight solution.
